Over a decade ago, I foresaw that Google might implement quality scoring for organic rankings and suggested various ways they could assess the quality of websites along with specific factors that might be essential for this. Recent core algorithm updates and the Medic Update over the past year, as well as the release of the Quality Rating Guidelines, suggest that a business’s reputation also plays a crucial role. If you’re curious about how this system might work, keep reading.

In 2007, I initially predicted that Google might introduce a Quality Score for organic search. In the following years, I emphasized the importance of aspects such as About Us pages for transparency, reliable Contact Us pages, good usability and user experience, copyright statements, and proper spelling and grammar. My predictions about these factors have proven accurate enough that I can still discern the direction in which the algorithm is evolving.

The latest version of Google’s "Quality Evaluators Guidelines," also known as "Quality Rating Guidelines" (QRG), almost mirrors my previous recommendations on quality factors.

Google Quality Rating Guidelines’ Relevance to Recent Updates

It is evident that quality factors, such as those detailed in the QRG, are increasingly influential in Google search rankings. Some might argue that I’m mistaken about the significance of the Quality Guidelines, as they’ve been extensively discussed since their release and Google representatives have stressed that the raters’ scores aren’t directly used in website rankings. Notably, Jennifer Slegg reported that Danny Sullivan confirmed on Twitter that the human evaluators’ ratings are not used in machine learning for algorithms, likening the rater data to feedback cards used by restaurants to gauge if their search “recipes” are effective.

Others have pointed to specific elements in the Guidelines as possible signals, especially those related to Expertise, Authoritativeness, and Trustworthiness (E-A-T, as Google calls them). For example, Marie Haynes suggested that factors mentioned in the Quality Raters Guidelines, such as a business’s BBB rating and authors’ reputations, might affect rankings. Some criticized her for implying that BBB ratings and author reputations are direct ranking factors, and John Mueller from Google stated that Google isn’t researching authors’ reputations or using proprietary rating scores like the BBB rating.

At the same time, Google representatives have increasingly advised webmasters to "focus on quality" and recommended they familiarize themselves with the QRG to offer the best possible content. Danny Sullivan echoed this advice in his comments on last year’s core algorithm updates, suggesting that webmasters read the QRG.

In an interview, Ben Gomes, Google’s VP of Search, Assistant, and News, explained that the rater guidelines serve as a vision for where the search algorithm should go, rather than detailing how the algorithm ranks results.

Determining Quality Algorithmically

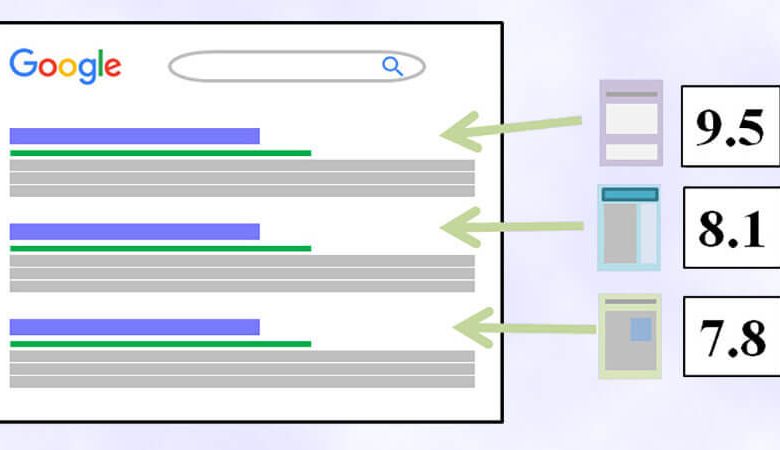

The question remains: How is Google algorithmically determining Quality using concepts such as expertise, authoritativeness, trustworthiness, and reputation? Google’s algorithms must translate these abstract notions into measurable criteria that can be compared across competing sites and pages.

I suspect some of Google’s past algorithmic developments hint at the process. They also reveal a potential disconnect between the questions posed, the responses from various Google representatives, and how those responses have been interpreted. Much of the speculation has been overly simplistic, focusing on naïve, theoretical elements, some of which Google representatives have explicitly denied.

When Google instructs its human raters to assess a site’s E-A-T but doesn’t use the resulting ratings, what is the algorithm using? Simply stating that the algorithm uses factors like a BBB rating, user reviews, or link-trust analysis seems too narrow. Similarly, suggesting that Google derives a quality assessment purely from link-trust and query analysis seems inadequate; Google clearly considers factors beyond these analyses.

Google’s Website Quality Patent

A past Google patent potentially outlines their strategy, possibly relying on machine learning. The patent, "Website Quality Signal Generation," described by Bill Slawski in 2013, details how human ratings could be associated with website signals: the system would find relationships between quantified signals and human ratings and build models from those characteristic signals. Unrated websites could then be compared against such a model to assign them a quality score. The patent language is intriguing:

"Raters (people) connect to the Internet to view websites and rate their quality. Raters submit website quality ratings to a quality analysis server, which stores ratings associated with web signals. The server identifies relationships between ratings and signals, creating a model of these relationships… The server also analyzes unrated websites for related signals to apply the model and calculate quality ratings, utilizing these to filter and order search results accordingly."

The patent gives examples of quality factors, like spelling and grammar correctness, the presence of obscenity, and the completeness of web pages, mirroring those mentioned in the QRG. The patent is compelling not for its specifics but for its logical framework for evaluating website quality and generating a Quality Score that influences rankings. The methods it describes suggest that relatively small sets of test pages could be used to generate models applicable to varied page types.
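To make the patent’s logic concrete, here is a minimal Python sketch of the general idea: fit a model on a small set of human-rated sites, then apply it to an unrated site. Everything in it is an assumption for illustration. The three signal names, the sample values, and the choice of a plain linear model trained by gradient descent are mine; the patent does not specify them, and nothing here reflects Google’s actual implementation.

```python
from typing import List, Sequence, Tuple

# Hypothetical rated sites: ([spelling_accuracy, completeness, obscenity], rater_score).
# All values are invented and scaled to the 0..1 range.
RATED_SITES: List[Tuple[List[float], float]] = [
    ([0.95, 0.90, 0.0], 0.89),
    ([0.90, 0.80, 0.0], 0.82),
    ([0.60, 0.50, 0.2], 0.43),
    ([0.40, 0.30, 0.5], 0.07),
    ([0.85, 0.40, 0.0], 0.64),
    ([0.50, 0.90, 0.1], 0.60),
]

Model = Tuple[List[float], float]  # (weights, bias)


def predict_quality(model: Model, signals: Sequence[float]) -> float:
    """Apply the learned weights and bias to one site's signals."""
    weights, bias = model
    return bias + sum(w * s for w, s in zip(weights, signals))


def fit_quality_model(rated: List[Tuple[List[float], float]],
                      learning_rate: float = 0.5,
                      epochs: int = 2000) -> Model:
    """Learn a linear signals-to-rating relationship by batch gradient descent."""
    n_signals = len(rated[0][0])
    weights = [0.0] * n_signals
    bias = 0.0
    n = len(rated)
    for _ in range(epochs):
        grad_w = [0.0] * n_signals
        grad_b = 0.0
        for signals, rating in rated:
            error = predict_quality((weights, bias), signals) - rating
            for j, s in enumerate(signals):
                grad_w[j] += error * s
            grad_b += error
        # Step against the average gradient of the squared error.
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias
```

Calling `fit_quality_model(RATED_SITES)` and then `predict_quality(model, [0.92, 0.85, 0.0])` would score a site no rater has ever seen. The point is the workflow, not the model: a real system would presumably use far more signals and a more capable learner.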

For instance, an informational health article could have Quality Score factors like content volume, page structure, ad placements, external and internal linking, PageRank, content relevance, query engagement, and positive assessments—coalescing into a machine-learned model for assessing quality without human evaluation. Different page types likely have corresponding models.

Leveraging Machine Learning

If Google’s process includes machine learning, it could recognize more nuanced relationships in the data than manual signal weighting would. Instead of relying on hard rules, machine learning might produce complex quality scores based on dynamic, intricate relationships among PageRank, user engagement, page layout, and other signals.

The patent details possibilities like using a machine learning subsystem to analyze website signals and ratings, resulting in a machine learning classifier. Such a classifier could identify pages of a similar class and assign them quality scores, standardizing assessment across similar page types.

Whether a learning system processes data directly from pages or refines it with manual interventions, Google could apply varied "models" for page types, checking them against quality raters’ assessments and adjusting weightings. With Google’s computational resources, this makes more sense than manual valuation. Other SEO analysts, like Eric Enge and Mark Traphagen, also believe Google employs machine learning beyond query interpretation.
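The idea of separate models per page type, checked against rater assessments, might be sketched roughly as follows. The page types, signal names, and weights are all hypothetical placeholders, and a simple weighted sum stands in for whatever learned model would actually be used.

```python
from typing import Dict, List, Tuple

Signals = Dict[str, float]

# Hypothetical per-page-type models: the same signals, weighted differently.
MODELS: Dict[str, Dict[str, float]] = {
    "health_article": {"content_depth": 0.5, "ad_intrusiveness": -0.3, "engagement": 0.2},
    "product_page": {"content_depth": 0.2, "ad_intrusiveness": -0.2, "engagement": 0.6},
}


def quality_score(page_type: str, signals: Signals) -> float:
    """Score a page with the model that matches its page type."""
    weights = MODELS[page_type]
    return sum(weight * signals.get(name, 0.0) for name, weight in weights.items())


def mean_abs_error(page_type: str, rated_pages: List[Tuple[Signals, float]]) -> float:
    """Compare model scores with rater scores; a large error suggests the
    weights for this page type need adjusting."""
    errors = [abs(quality_score(page_type, signals) - rating)
              for signals, rating in rated_pages]
    return sum(errors) / len(errors)
```

With this structure, the same page signals can yield different scores depending on which page-type model applies, and `mean_abs_error` gives a simple way to see how far a model has drifted from the raters’ judgments.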

This may explain why Google offers only vague advice for addressing ranking drops after core algorithm updates. Sophisticated scoring through support vector machines or neural networks could obscure how much each factor contributes, making it difficult to pinpoint any specific signal or combination of signals beyond general guidelines.

Once the model is incorporated into rankings, additional evaluator feedback could refine the results iteratively for progressive improvement.

Potential Complex Quality Signals

Several possible Quality Score factors are themselves complex:

- PageRank involves link quality assessment.

- User Reviews Sentiment would likely be considered on a threshold basis, informed by Google’s previous sentiment analysis work and potential reputation impacts.

- Mentions Sentiment could consider product mentions in social media and other communication.

- Click-Through-Rate (CTR) appears to have some influence, based on past observations. It might contribute to Quality Score rather than directly impact rankings.

- Advertising Intrusiveness assesses ad proportions and disruptiveness in page layouts.

- Identification Transparency evaluates About/Contact page presence, encouraging staff identification and comprehensive contact details.

- E-Comm & Financial Security concerns extend beyond HTTPS, considering certificate robustness and visible policies.

- Page Speed remains a relevant ranking factor.

- Regular Reviews/Updates address content freshness and accuracy, especially in dynamic fields like medical or financial information.

- No-Content/Worthless Pages can negatively impact perceived site quality.

- Malicious/Objectionable Content immediately detracts from quality.
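A few of the factors above can be turned into numeric signals in a fairly obvious way. The sketch below is purely illustrative: the field names, the minimum review count of 10, and the formulas are my own assumptions, not anything Google has published.

```python
from dataclasses import dataclass
from typing import Dict, Optional

MIN_REVIEWS = 10  # assumed threshold below which review sentiment is ignored


@dataclass
class PageObservations:
    ad_area_px: int          # total area occupied by ads
    viewport_area_px: int    # area of the visible page layout
    review_count: int
    positive_reviews: int
    has_about_page: bool
    has_contact_page: bool


def ad_intrusiveness(obs: PageObservations) -> float:
    """Share of the layout taken up by ads, capped at 1.0."""
    if obs.viewport_area_px <= 0:
        return 0.0
    return min(1.0, obs.ad_area_px / obs.viewport_area_px)


def review_sentiment(obs: PageObservations) -> Optional[float]:
    """Fraction of positive reviews, or None when there are too few to count."""
    if obs.review_count < MIN_REVIEWS:
        return None
    return obs.positive_reviews / obs.review_count


def identification_transparency(obs: PageObservations) -> float:
    """0.0, 0.5, or 1.0 depending on how many of About/Contact are present."""
    return (int(obs.has_about_page) + int(obs.has_contact_page)) / 2


def to_signals(obs: PageObservations) -> Dict[str, Optional[float]]:
    """Bundle the individual measurements into one signal dictionary."""
    return {
        "ad_intrusiveness": ad_intrusiveness(obs),
        "review_sentiment": review_sentiment(obs),
        "identification_transparency": identification_transparency(obs),
    }
```

A dictionary like the one returned by `to_signals` is the kind of quantified input a learned quality model would need. The threshold in `review_sentiment` shows why a handful of glowing reviews might have no effect at all.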

PageRank-Like Score Calculation Methodology

Quality Score computation may also reflect the Quality Scores of linking pages/domains, potentially iterating across the link graph akin to the PageRank algorithm. This would reinforce the visibility of premium content and demote low-quality content, improving overall search result quality.
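An iterative pass over the link graph could look something like the sketch below. It is not the PageRank algorithm itself, only a simplified analogue: each page keeps part of its own base score and blends in the average score of the pages linking to it. The `damping` value and the averaging rule are assumptions chosen for readability.

```python
from typing import Dict, List


def propagate_quality(base_scores: Dict[str, float],
                      links: Dict[str, List[str]],
                      damping: float = 0.5,
                      iterations: int = 20) -> Dict[str, float]:
    """Blend each page's own score with the scores of pages linking to it.

    `links` maps a source page to the pages it links to. Link targets that
    have no base score are ignored.
    """
    # Build a reverse index: page -> pages that link to it.
    inbound: Dict[str, List[str]] = {page: [] for page in base_scores}
    for source, targets in links.items():
        if source not in base_scores:
            continue
        for target in targets:
            if target in inbound:
                inbound[target].append(source)

    scores = dict(base_scores)
    for _ in range(iterations):
        updated = {}
        for page, base in base_scores.items():
            sources = inbound[page]
            if sources:
                linker_avg = sum(scores[s] for s in sources) / len(sources)
                updated[page] = (1 - damping) * base + damping * linker_avg
            else:
                updated[page] = base  # no inbound links: keep the base score
        scores = updated
    return scores
```

For example, with base scores `{"a": 0.9, "b": 0.5, "c": 0.5, "spam": 0.1}` and links `{"a": ["b"], "spam": ["c"]}`, pages `b` and `c` start out identical but end up apart: `b` rises because a high-quality page links to it, while `c` sinks because its only inbound link comes from a low-quality page.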

Medic Update Site Observations

A review of sites affected by the Medic Update, per Barry Schwartz’s list, reveals deficiencies in criteria linked to quality factors noted in the QRG, such as contact transparency and effective content segmentation. However, superficial fixes might not fully resolve a site’s combination of quality concerns.

Navigating Mixed Signals Over Ranking Factors

Controversy surrounds some potential signals, and disagreement persists over certain assertions. I believe Google’s communications have often exploited semantics. Its representatives might deny that certain factors (CTR, sentiment analysis) are direct ranking influences even when those factors are integral to a broader Quality Score assessment that does influence rankings. For example, high CTR alone may not suffice without complementary quality signals, which makes it hard to trace a ranking change back to any single factor.

The "model," or pattern of quality signals, likely differs across page types, aligning closely with Danny Sullivan’s "search recipe" metaphor. Different combinations and mappings of factors likely determine the Quality Scores of different page types.

Using support vector regression or neural networks would make Quality Scores hard to reverse-engineer, because the content is evaluated holistically. Google’s focus on generalized quality-improvement advice fits this picture, since it supports comprehensive content optimization across factors, as Glenn Gabe observed.
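The difference between a direct ranking factor and an input to a composite score can be shown with a toy function. The formula, the 0.7/0.3 split, and the signal names are invented for this illustration and are not drawn from any Google documentation.

```python
def composite_quality(ctr: float, content_quality: float, trust: float) -> float:
    """Toy composite score where all inputs are in the 0..1 range.

    CTR only scales a foundation set by the weaker of the other two
    signals, so it cannot rescue a page that lacks underlying quality.
    """
    foundation = min(content_quality, trust)
    return foundation * (0.7 + 0.3 * ctr)


# The same CTR improvement is worth more to a strong page than a weak one.
lift_strong = composite_quality(0.9, 0.8, 0.8) - composite_quality(0.2, 0.8, 0.8)
lift_weak = composite_quality(0.9, 0.2, 0.9) - composite_quality(0.2, 0.2, 0.9)
```

In this toy model a page with high CTR but weak content still scores below a page with low CTR and strong content, and `lift_strong` is larger than `lift_weak`. Because the effect of one signal depends on the others, observing a ranking change tells you little about which single factor caused it, even in a formula this simple.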

Enhancing Site Quality Score

Understanding the complexity of the Quality Score can help you improve rankings:

Fundamental SEO practices remain applicable. Prioritize technical SEO hygiene, minimizing errors and no-content pages. Build comprehensive site documentation (About, Contact, Privacy, Terms & Conditions), and ensure that quality content aligns with user needs on pages that are kept up to date and technically optimized.

Strive for excellent usability, customer service, and content. Engage critical evaluators for feedback. Maintain community engagement online and offline, supporting positive reputation metrics over time. Engage positively with social and professional communities, offering free expertise.

These strategies generate favorable signals for a good Quality Score, eventually benefiting rankings.