John Mueller from Google advises that content needs to be in HTML for it to be indexed quickly. This is particularly relevant for sites that regularly produce new or updated content. Mueller shared his insights during a Twitter discussion about Google’s two-pass indexing system.

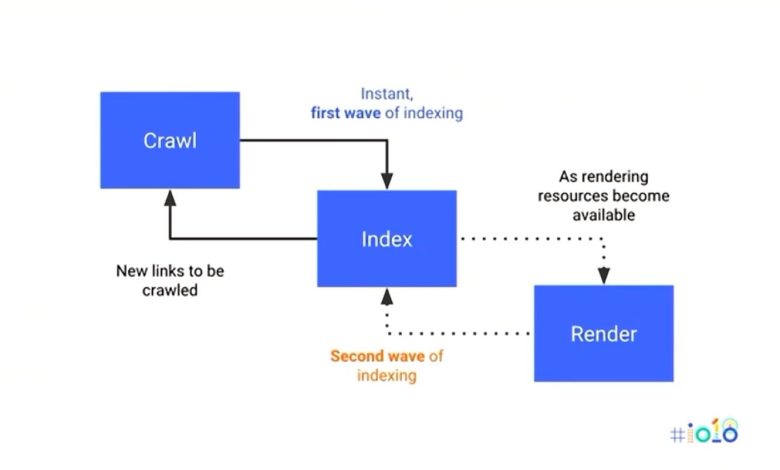

When Google crawls and indexes content, it does so in two passes. The first pass considers only the HTML; a later second pass renders the page, including its JavaScript, and indexes the result. Mueller notes that there is “no fixed timeframe” between these passes: the second can follow promptly in some situations but may take days or weeks in others.

Mueller emphasized that if your site frequently updates content and you want quick indexing, the content must be in the HTML.

This matters most for pages that rely heavily on client-side JavaScript rendering. Content that only appears after JavaScript runs can be missed during the first pass, so it is not fully indexed until the second pass, which could take weeks.

Thus, content might not be fully indexed in Google Search until weeks after publication. This delay is less than ideal, highlighting the importance of Googlebot seeing the main content during the first pass.
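One rough way to check whether your main content is available to an HTML-only first pass is to look for a key phrase in the raw HTML response, before any JavaScript has run. The sketch below is an illustration under stated assumptions, not a reproduction of how Googlebot works: the class and function names (`VisibleTextExtractor`, `text_in_raw_html`, `phrase_in_raw_html`) and the sample markup are hypothetical, and the check only approximates "visible in the HTML" by ignoring the contents of `<script>` and `<style>` tags.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects text from raw HTML, skipping <script> and <style> bodies."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that sits outside skipped tags.
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def text_in_raw_html(html: str) -> str:
    """Return the text found in the HTML source, with no JavaScript executed."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def phrase_in_raw_html(html: str, phrase: str) -> bool:
    """Case-insensitive check that a phrase is present in the raw HTML text."""
    return phrase.lower() in text_in_raw_html(html).lower()


# Hypothetical sample pages: one server-rendered, one filled in by JavaScript.
SERVER_RENDERED = (
    "<html><body><h1>Spring sale</h1>"
    "<p>All boots 20% off.</p></body></html>"
)
CLIENT_RENDERED = (
    '<html><body><div id="app"></div>'
    "<script>"
    'document.getElementById("app").textContent = "All boots 20% off.";'
    "</script></body></html>"
)

print(phrase_in_raw_html(SERVER_RENDERED, "All boots 20% off"))  # True
print(phrase_in_raw_html(CLIENT_RENDERED, "All boots 20% off"))  # False
```

In the second sample the phrase exists only inside a script, so an HTML-only reading of the page finds an empty container. To run the same check against a live page, you would pass in the response body fetched without a browser, for example with `urllib.request.urlopen`.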

Adding to the conversation, veteran SEO Alan Bleiweiss mentioned he recently audited a site that suffered significantly after switching to all client-side JavaScript rendering on critical pages.

Googlebot does not crawl and render a page in a single step, because of resource limitations. Rendering JavaScript-powered pages demands processing power and memory, neither of which Googlebot has in unlimited supply. When a page includes JavaScript, rendering is postponed until Googlebot can allocate the necessary resources.

A page might get indexed before rendering is complete, and the full rendering might occur later. Once rendering is finalized, Google conducts a second round of indexing on the rendered content. For more in-depth discussion on making JavaScript-powered websites search-friendly, see the detailed talk from Google I/O.
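The deferred-rendering behavior described above can be pictured with a toy model. This is a deliberately simplified sketch, not Google's implementation: the `Page` and `ToyIndex` classes, the `render_budget` parameter, and the idea of a single queue are all assumptions made for illustration, and the "rendered" text is supplied by hand because no JavaScript is actually executed.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class Page:
    url: str
    html_text: str         # text present in the raw HTML response
    rendered_text: str     # text present after JavaScript has run
    uses_javascript: bool


@dataclass
class ToyIndex:
    indexed: dict[str, str] = field(default_factory=dict)
    render_queue: deque[Page] = field(default_factory=deque)

    def first_pass(self, page: Page) -> None:
        """Index what the raw HTML contains; defer rendering if JS is present."""
        self.indexed[page.url] = page.html_text
        if page.uses_javascript:
            self.render_queue.append(page)

    def second_pass(self, render_budget: int) -> None:
        """Render as many queued pages as the (hypothetical) budget allows."""
        for _ in range(min(render_budget, len(self.render_queue))):
            page = self.render_queue.popleft()
            self.indexed[page.url] = page.rendered_text


index = ToyIndex()
index.first_pass(Page("/static", "Full article text", "Full article text", False))
index.first_pass(Page("/spa", "", "Full article text", True))

print(index.indexed["/spa"] == "")  # True: nothing indexed yet for the JS page

index.second_pass(render_budget=1)
print(index.indexed["/spa"])  # Full article text
```

The static page is fully indexed after the first pass, while the JavaScript-dependent page stays empty in the index until rendering resources become available. In this model that wait is just the gap between two method calls; in practice, as Mueller notes, it has no fixed timeframe.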